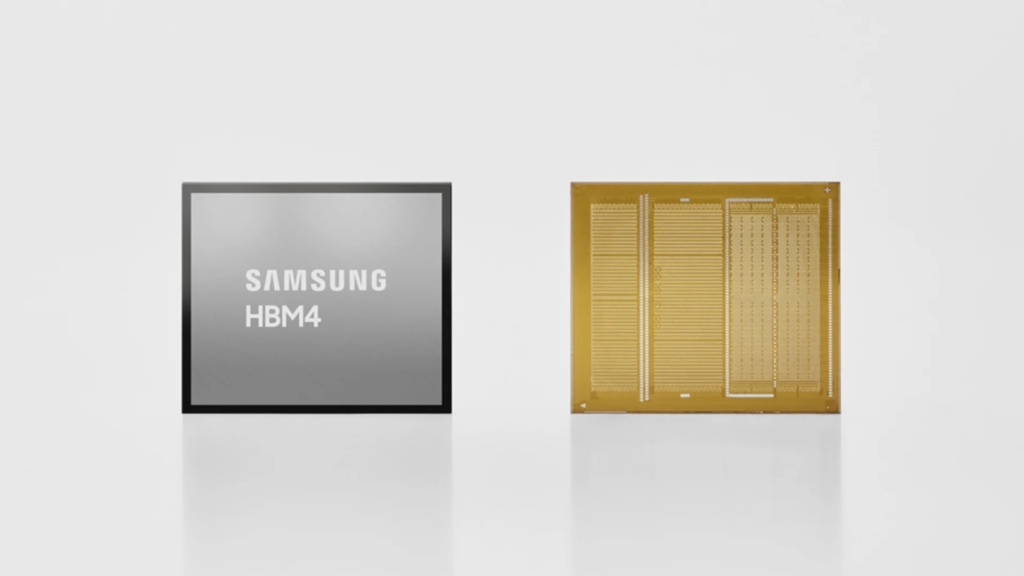

Samsung HBM4 mass production

Samsung Electronics says it has begun mass production and shipments of its next-generation high-bandwidth memory chip, HBM4, in a move the company is presenting as a major step in the intensifying global race to supply the booming artificial intelligence market.

The South Korean tech giant said the new AI memory chip is designed to reduce data bottlenecks in next-generation data centres and AI computing systems, as demand for high-bandwidth memory rises on the back of generative AI models and rapid expansion in AI infrastructure.

Samsung’s announcement lands at a time when memory makers are battling for position in a segment dominated by a few players and driven heavily by orders tied to AI accelerators. In recent years, high-bandwidth memory has moved from a specialist product into a core component of the AI supply chain, because it helps AI processors handle massive volumes of data at very high speeds.

What Samsung said it is producing and shipping

In its statement, Samsung said HBM4 has entered mass production with a consistent per-pin transfer speed of 11.7Gbps, which can reach up to 13Gbps at peak, positioning the product as a performance upgrade over its previous generation.
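As a rough illustration of what those per-pin figures mean for a whole memory stack, the sketch below multiplies the data rate by the interface width. It assumes the 2048-bit per-stack interface defined in the JEDEC HBM4 standard, which Samsung's statement as quoted here does not mention. The helper name `stack_bandwidth_gb_s` is hypothetical, and the result is a theoretical peak, not a measured figure.

```python
# Hypothetical helper: per-stack peak bandwidth from a per-pin data rate.
# Assumption: a 2048-bit data interface per stack (JEDEC HBM4); this width
# is not taken from Samsung's announcement.
HBM4_INTERFACE_BITS = 2048


def stack_bandwidth_gb_s(pin_rate_gbps: float,
                         interface_bits: int = HBM4_INTERFACE_BITS) -> float:
    """Theoretical peak bandwidth of one HBM stack, in gigabytes per second."""
    # Each pin moves pin_rate_gbps gigabits per second; divide by 8 for bytes.
    return pin_rate_gbps * interface_bits / 8


if __name__ == "__main__":
    for label, rate in [("consistent", 11.7), ("peak", 13.0)]:
        bw = stack_bandwidth_gb_s(rate)
        print(f"{label}: {rate} Gbps/pin -> {bw:.1f} GB/s (~{bw / 1000:.2f} TB/s) per stack")
```

Under that assumption, 11.7Gbps works out to roughly 3.0TB/s per stack and 13Gbps to roughly 3.3TB/s. Real-world throughput also depends on the accelerator's memory controller and the workload.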

Samsung also said the HBM4 product uses a 4nm logic base die, a detail meant to signal improvements in performance, reliability and energy efficiency for demanding AI workloads in modern data centres.

In practical terms, Samsung is selling this as a “traffic control” solution for AI: when AI accelerators are starved of fast memory bandwidth, computing power gets wasted. That is one reason HBM has become one of the hottest battlegrounds in semiconductors.
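The "wasted compute" argument can be sketched with a simple roofline model, a standard way to reason about whether a workload is limited by arithmetic or by memory. This is a minimal illustration under stated assumptions: the peak-compute and bandwidth figures are hypothetical round numbers, not specifications of any real accelerator, and `attainable_tflops` is a hypothetical helper.

```python
# Minimal roofline sketch: attainable throughput is capped either by the
# chip's compute peak or by memory bandwidth x arithmetic intensity.
# All hardware numbers below are hypothetical, not specs of any real product.


def attainable_tflops(peak_tflops: float, bandwidth_tb_s: float,
                      flops_per_byte: float) -> float:
    """Roofline bound: min(compute peak, bandwidth * arithmetic intensity)."""
    # TB/s multiplied by FLOP/byte gives TFLOP/s.
    return min(peak_tflops, bandwidth_tb_s * flops_per_byte)


PEAK_TFLOPS = 1000.0  # hypothetical accelerator compute peak

if __name__ == "__main__":
    for bandwidth in (4.0, 8.0):  # hypothetical total memory bandwidth, TB/s
        for intensity in (2.0, 50.0, 500.0):  # FLOPs performed per byte fetched
            achieved = attainable_tflops(PEAK_TFLOPS, bandwidth, intensity)
            utilisation = achieved / PEAK_TFLOPS
            print(f"{bandwidth} TB/s, {intensity} FLOP/B -> "
                  f"{achieved:.0f} TFLOP/s ({utilisation:.0%} of peak)")
```

In this toy example, doubling memory bandwidth doubles throughput for the low-intensity workloads, while the compute-heavy case already sits at the compute ceiling. The idle compute in the first case is the gap faster memory such as HBM is meant to close.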

The start of HBM4 mass production is therefore not just a factory milestone. It is a bid to win more design slots with the world’s biggest AI chip customers, including those building next-generation servers and accelerators.

The competitive backdrop

Samsung is ramping HBM4 under intense pressure from rivals. Reuters reported that competition is tightening as companies such as SK Hynix and Micron push to secure supply positions for the next wave of AI systems, and that HBM4 is already a focal point of that contest.

This matters because the HBM market is high-margin and reputation-driven. Once a supplier is qualified for a major platform, it can shape multi-quarter demand and influence how quickly that supplier’s other memory products gain traction. It also influences investor confidence: Reuters noted Samsung shares jumped after the announcement, reflecting how much weight markets attach to HBM momentum.

Samsung has been publicly upbeat about the outlook. A Samsung semiconductor executive told Reuters this week that memory chip demand should remain strong through 2026 and into 2027, largely driven by AI growth, while also describing customer feedback on Samsung’s upcoming HBM4 as “very satisfactory.”


Why HBM4 is being called “AI memory”

Samsung’s HBM4 launch is being framed as an “AI memory” story because HBM is now tightly coupled with the AI compute cycle. AI accelerators require memory that can keep pace with their throughput, and HBM stacks memory dies vertically to deliver high bandwidth in a compact footprint.

As AI models grow in size and inference volumes rise, bandwidth constraints become a performance limiter. That is why suppliers highlight transfer speeds, stack capacities, and power efficiency.

The HBM4 ramp also signals an attempt to close a perception gap: Samsung wants customers to see it as ready to supply the most advanced AI memory at scale, not just to announce prototypes.

What comes next on Samsung’s roadmap

Samsung said it plans to provide samples of HBM4E later in the year and laid out a broader roadmap beyond HBM4.

That roadmap matters to customers because the AI supply chain plans far ahead. Data centre operators and chip designers want predictable ramps: today’s qualification decisions can become next year’s production commitments.

In that sense, HBM4 mass production is also a signal to customers: “we are producing now, and we are already preparing what comes after.”

The bottom line

Samsung has started mass production of its next-generation AI memory chip at a moment when AI infrastructure spending is reshaping the memory industry. The headline product is HBM4, and Samsung is pushing two messages at once: improved speed and readiness to ship at scale.

Whether Samsung can convert this ramp into a bigger share of the most valuable HBM supply deals will depend on qualification outcomes, steady yields, and customer adoption in next-generation AI systems. But the direction is clear: the AI era is forcing memory makers to compete like platform companies, not commodity suppliers.

Samsung’s HBM4 ramp will be watched closely, because whoever wins HBM share often wins the narrative, the pricing power, and the next round of AI memory orders.